Some say we will soon have AGI, and some say we won’t. The question of our future AI capabilities is relevant to most, yet many positions seem to depend more on incentive or ideology than evidence.

Why is it that public statements from leaders at model providers are so optimistic about how today’s models can scale? Many point to scaling laws, but I’m not sure if scaling laws are as predictive or straightforwardly optimistic as many claim.

I’ll show how a naive extrapolation of some scaling laws can even suggest that the improvement from GPT-4 to a GPT-7-like model (using loose data and compute estimates for such a model) would be of the same magnitude as the improvement from GPT-3 to GPT-4.

The implications of future models are far-reaching, and we need better discussions about predicting their capabilities.

Scaling laws aren’t as predictive as you think

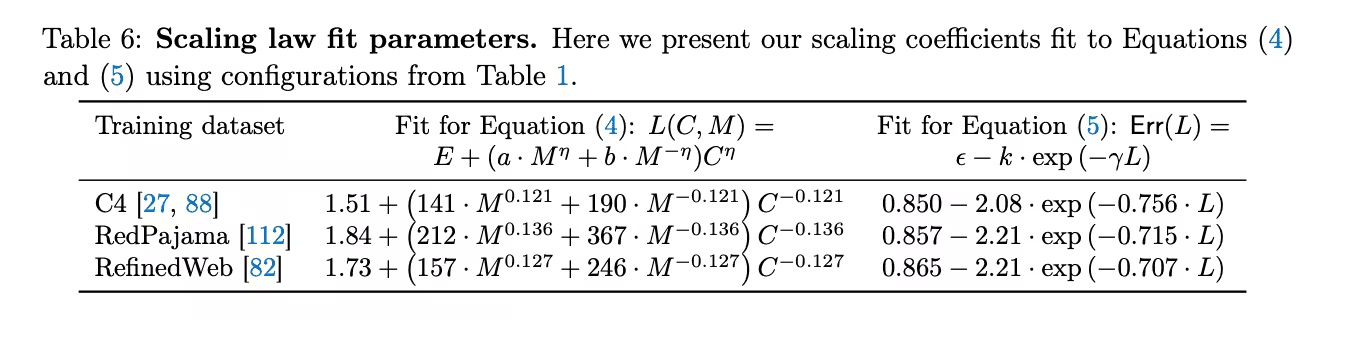

Many claim that if you look at these scaling laws and extrapolate, it’s clear that we’ll see massive gains by simply scaling up existing models. Scaling laws are quantitative relationships that relate model inputs (data and compute) to model outputs (how well the model predicts the next word). They’re created by plotting model inputs and outputs at various levels on a graph.

But using scaling laws to make predictions isn’t as easy as people claim. To begin with, most scaling laws (Kaplan et al., Chinchilla, and Llama) output how well models predict the next word in a dataset, not how well models perform at real-world tasks. A 2023 blog post by a popular OpenAI researcher notes that “it’s currently an open question if surrogate metrics [like loss] could predict emergence … but this relationship has not been well-studied enough …”

Chaining two approximations together to make predictions

To fix the above issue, you can fit a second scaling law that quantitatively relates upstream loss to real-world task performance, and then chain the two scaling laws together to make predictions of real-world tasks.

Loss = f(data, compute)

Real world task performance = g(loss)

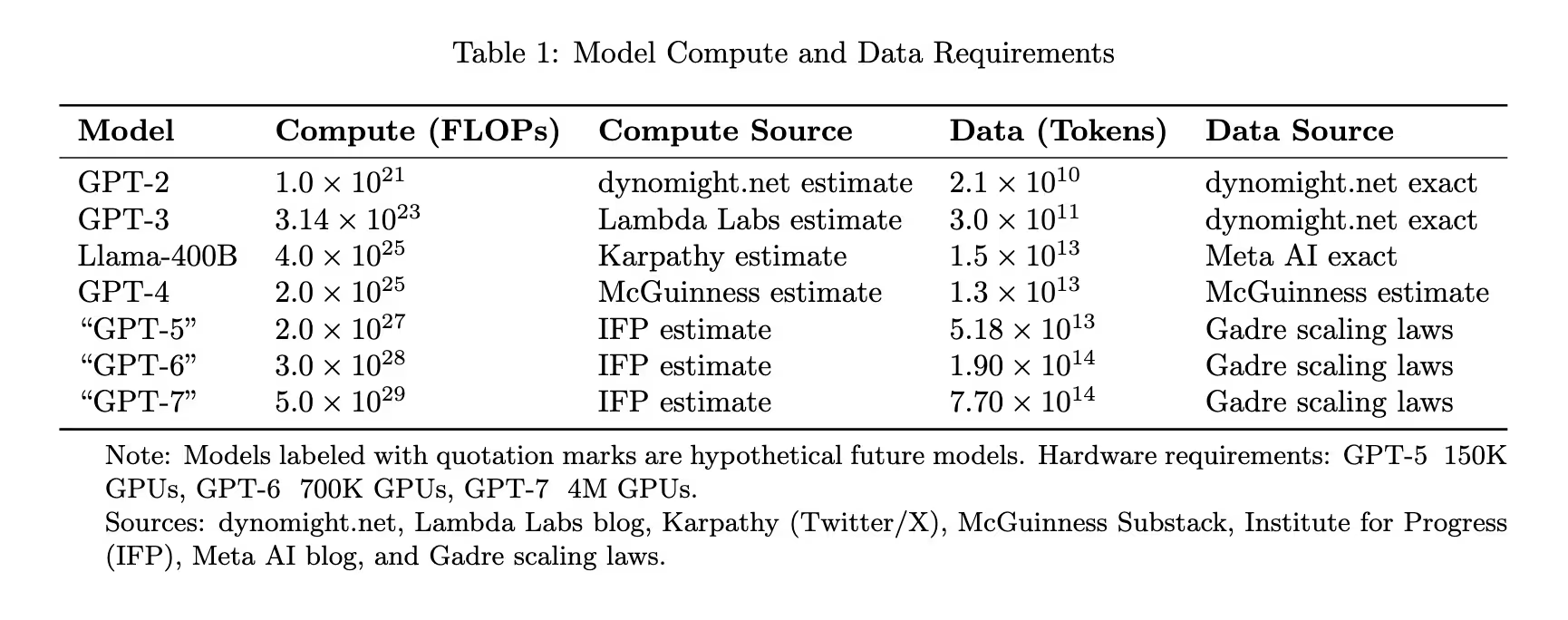

Real world task performance = g(f(data, compute))

In 2024, Gadre et al. and Dubey et al. introduced scaling laws of that sort. Dubey uses this method of chained laws for prediction and claims that its predictive ability “extrapolates [well] over four orders of magnitude” for the Llama 3 models.
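The chaining itself is easy to sketch in Python. This is a minimal illustration, not the fitted laws from either paper: the functional forms (a power law with a floor for `f`, a saturating exponential for `g`), the use of error rather than accuracy as the performance measure, and every constant below are placeholder assumptions chosen only to make the example run.

```python
import math

# Placeholder constants: illustrative only, not fitted to any real model family.
E, A, B = 1.8, 400.0, 400.0      # loss floor and scale terms (assumed)
ALPHA, BETA = 0.34, 0.28         # power-law exponents (assumed)
EPS, K, GAMMA = 0.9, 3.0, 1.0    # parameters of the loss-to-error map (assumed)


def f(data_tokens: float, compute_flops: float) -> float:
    """First law: predicted next-token loss from data and compute."""
    # Common approximation: training compute ~ 6 * parameters * tokens.
    params = compute_flops / (6.0 * data_tokens)
    return E + A / params**ALPHA + B / data_tokens**BETA


def g(loss: float) -> float:
    """Second law: predicted task error from loss (lower loss, lower error)."""
    return EPS - K * math.exp(-GAMMA * loss)


def predicted_error(data_tokens: float, compute_flops: float) -> float:
    """Chained prediction: error = g(f(data, compute))."""
    return g(f(data_tokens, compute_flops))


# Two hypothetical training runs, a smaller one and a larger one.
small = predicted_error(3e11, 3e23)
large = predicted_error(1.3e13, 2e25)
print(f"predicted error, small run: {small:.3f}; large run: {large:.3f}")
```

Any error in `f` passes straight through `g`, so the chained prediction is only as good as the weaker of the two fits.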

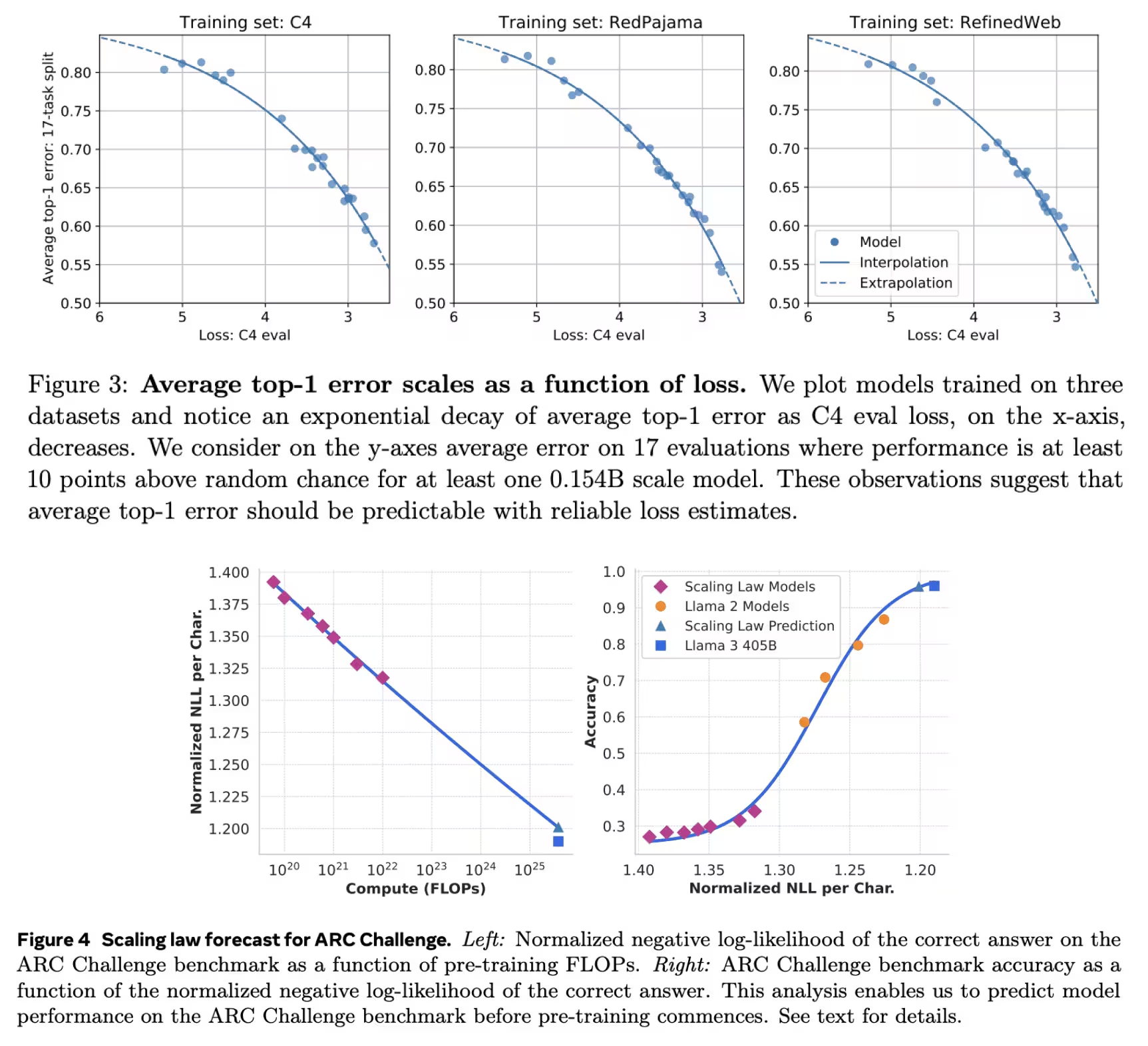

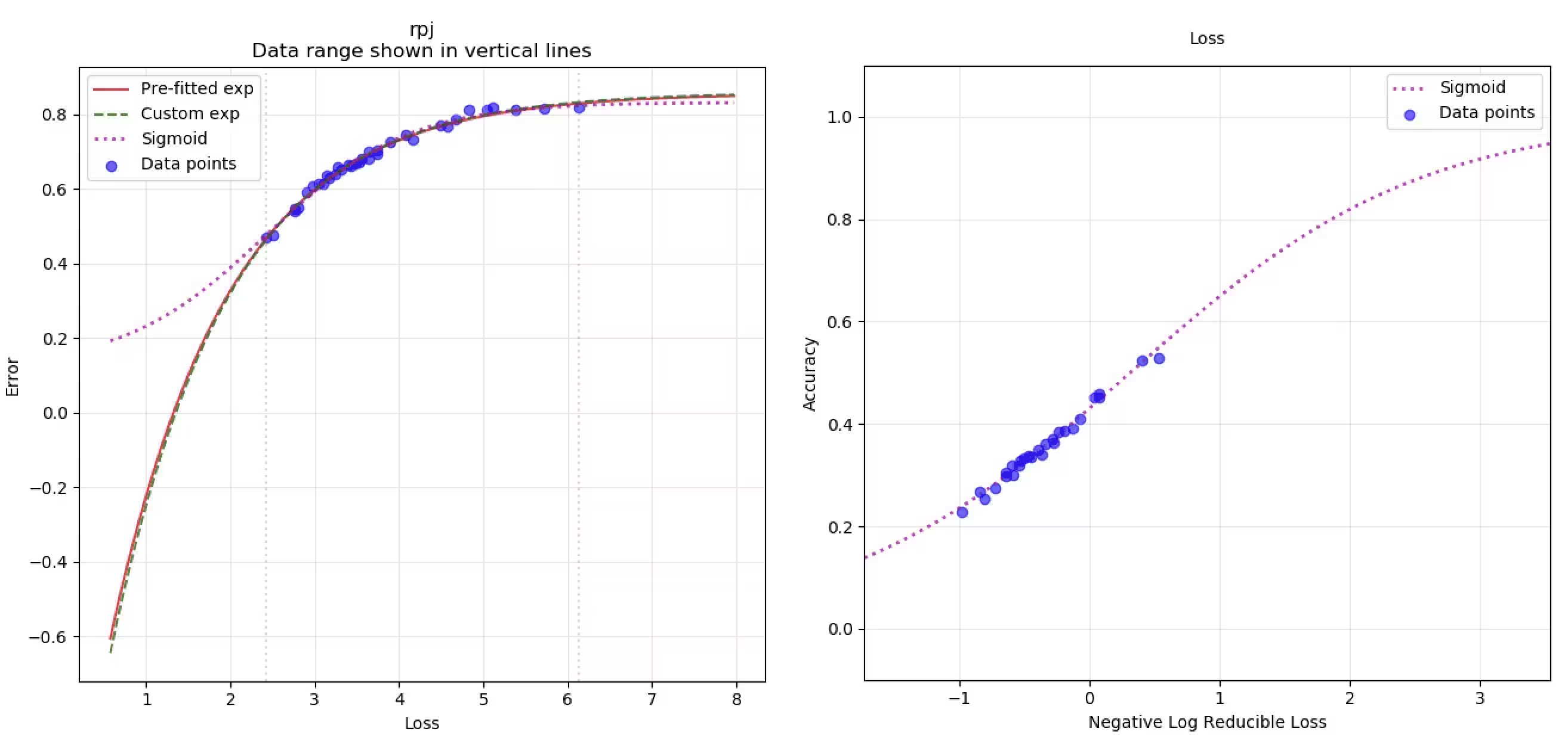

However, work on these second scaling laws is nascent, and with so few data points, choosing a fitting function becomes a highly subjective judgment call. For example, in the figures above, Gadre assumes an exponential relationship for an average across many tasks (top), while Dubey assumes a sigmoidal relationship for a single task, ARC Challenge (bottom). These scaling laws are also highly task-dependent (see Mosaic).

Without a strong hypothesis for the relationship between loss and real-world task accuracy, we don’t have a strong hypothesis for the capabilities of future models.

A shoddy attempt at using chained scaling laws for predictions

What would happen if we used some of these naively chained scaling laws to make predictions anyway? Note that the goal here is to show how one can use some set of scaling laws (Gadre) to obtain a prediction, rather than to produce a detailed prediction.1

To start, we can use publicly available information to estimate the data and compute inputs2 for the next few model releases. We can take announcements of the largest data center build-outs, estimate the expected resulting compute based on their GPU capacity, and map them onto each successive model generation.3

Then, we can use scaling laws to estimate the amount of data these clusters would need. The largest cluster estimate from the Institute for Progress would ideally train on 770T tokens to minimize loss according to the scaling laws we’re using—a few times larger than the size of the indexed web.4 That seems challenging but feasible, so let’s just use that for now.
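The two estimation steps above can be sketched as a back-of-envelope calculation. Everything specific here is an assumption for illustration: the GPU count, per-GPU throughput, and run length are hypothetical, and the token rule is the Chinchilla-style heuristic of roughly 20 tokens per parameter combined with compute ≈ 6 × parameters × tokens. That heuristic is not necessarily the rule behind the 770T figure, so the sketch will not reproduce that number.

```python
import math

SECONDS_PER_DAY = 86_400


def cluster_training_flops(num_gpus, flops_per_gpu_per_s, days, utilization=1.0):
    """Total training FLOPs a cluster can deliver over one run."""
    return num_gpus * flops_per_gpu_per_s * days * SECONDS_PER_DAY * utilization


def compute_optimal_split(compute_flops, tokens_per_param=20.0):
    """Split a compute budget into (params, tokens) using C ~ 6*N*D and D = r*N."""
    params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens


# Hypothetical cluster: 100,000 GPUs at 1e15 FLOP/s each, training for 100 days.
budget = cluster_training_flops(100_000, 1e15, 100)
params, tokens = compute_optimal_split(budget)
print(f"compute: {budget:.2e} FLOPs, params: {params:.2e}, tokens: {tokens:.2e}")
```

Leaving `utilization` at 1.0 treats peak GPU FLOPs as effective FLOPs, which overstates what a real cluster delivers.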

See link to Google Sheet of data and compute estimates5


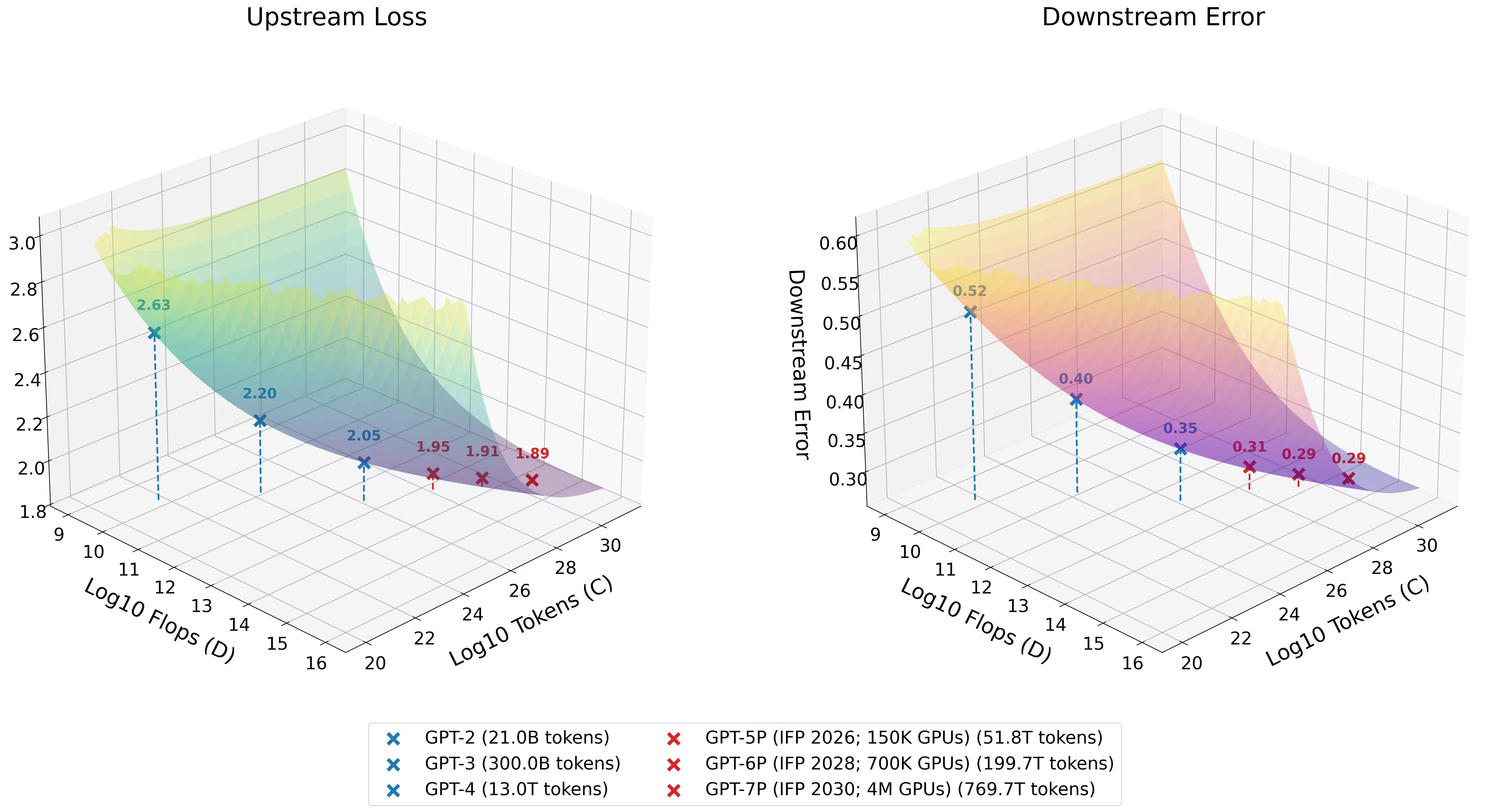

Finally, we can plug these inputs into chained scaling laws and extrapolate. The right plot is what we’re interested in. It shows real-world task performance on the vertical axis, plotted against the inputs of data and compute on the horizontal axes. Blue points represent performance on existing models (GPT-2, GPT-3, etc), while red points are projections for the next scale-ups (GPT-5, GPT-6, GPT-7, etc):

See link to Github repository for chained Gadre scaling laws and resulting plots


The main takeaway is that the scaling laws we used predict dramatically diminishing returns in performance from GPT-4 onwards. The predicted improvement in measured real-world tasks, from GPT-4 all the way up to a GPT-7 style model (~25000X more compute), would be similar to the predicted improvement just from GPT-3 to GPT-4 (~100X more compute).
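A toy calculation shows how a much larger scale-up can buy a smaller gain. It assumes loss follows a power law in compute with a floor, L(C) = floor + a · C^(−η), and looks only at loss, not at the chained task metric. The exponent `ETA` and the starting value are hypothetical placeholders, not values from Gadre or any other paper.

```python
def reducible_loss_after(reducible_loss, compute_multiplier, eta):
    """Reducible loss after scaling compute, assuming L(C) = floor + a * C**(-eta)."""
    return reducible_loss * compute_multiplier ** (-eta)


ETA = 0.2      # hypothetical exponent, not a fitted value
start = 1.0    # reducible loss at the first model, in arbitrary units

after_100x = reducible_loss_after(start, 100, ETA)
after_further_25000x = reducible_loss_after(after_100x, 25_000, ETA)

first_gain = start - after_100x
second_gain = after_100x - after_further_25000x
print(f"gain from 100x: {first_gain:.3f}; gain from a further 25,000x: {second_gain:.3f}")
```

With this placeholder exponent, the second scale-up yields a smaller drop in loss than the first, because each multiplier only removes a fraction of whatever reducible loss is left.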

Are we getting close to the irreducible loss?

If you look at the left plot, the issue with these scaling laws is that we’re approaching the irreducible loss.6 The irreducible loss is closely related to the entropy of the dataset, and represents the theoretical best performance a model can reach on that dataset. With Gadre scaling laws on RedPajama, if the best possible model can only reach an irreducible loss of ~1.84, and we’re already at ~2.05 with GPT-4, there’s not that much room for improvement.7

However, most labs have not been releasing the loss values for their most recent frontier model training runs, so we don’t know how close we actually are to the irreducible loss.

Subjectivity in fitting functions and the limits of our data

As mentioned previously, the choice of fitting function on the second scaling law is highly subjective. For example, we could refit the loss and performance points from the Gadre paper using a sigmoidal function instead of an exponential one:

See link to Github repository for sigmoidal fit on Gadre data and resulting plots


Yet, the conclusion remains largely unchanged. Comparing the exponential fit (red line) with our custom sigmoidal fit (dotted purple line) in the left graph, the limitation is clear: we simply don’t have enough data points to confidently determine the best fitting function for relating loss to real-world performance.
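To make the fitting-function problem concrete, here is a minimal sketch that fits both an exponential and a sigmoidal form to the same handful of points and then extrapolates each to lower loss. The data points are synthetic and purely illustrative, and the fitting routine (a grid search over shape parameters with a closed-form least-squares solve for the rest) is a stand-in chosen to keep the example dependency-free, not the method used in the linked repository.

```python
import math


def linear_fit(zs, ys):
    """Ordinary least squares for y = a + b * z; returns (a, b, sse) or None."""
    n = len(zs)
    z_mean, y_mean = sum(zs) / n, sum(ys) / n
    szz = sum((z - z_mean) ** 2 for z in zs)
    if szz < 1e-18:                      # feature is (nearly) constant: skip
        return None
    b = sum((z - z_mean) * (y - y_mean) for z, y in zip(zs, ys)) / szz
    a = y_mean - b * z_mean
    sse = sum((a + b * z - y) ** 2 for z, y in zip(zs, ys))
    return a, b, sse


def fit_family(feature, shapes, losses, errors):
    """Grid-search shape parameters, solving the linear part in closed form.

    Returns (sse, predictor) for the best shape on the grid.
    """
    best = None
    for shape in shapes:
        fit = linear_fit([feature(x, shape) for x in losses], errors)
        if fit is not None and (best is None or fit[2] < best[0]):
            a, b, sse = fit
            best = (sse, lambda x, a=a, b=b, s=shape: a + b * feature(x, s))
    return best


def exp_feature(loss, gamma):            # error = eps - k * exp(-gamma * loss)
    return math.exp(-gamma * loss)


def sigmoid_feature(loss, shape):        # error = lo + span * sigmoid(s * (loss - m))
    s, m = shape
    return 1.0 / (1.0 + math.exp(-s * (loss - m)))


# Synthetic (loss, error) points: illustrative only, not data from any paper.
losses = [2.6, 2.8, 3.0, 3.3, 3.6]
errors = [0.68, 0.72, 0.75, 0.79, 0.82]

gammas = [i / 10 for i in range(1, 51)]
sigmoid_shapes = [(s / 2, m / 10) for s in range(1, 21) for m in range(15, 41)]

exp_sse, exp_predict = fit_family(exp_feature, gammas, losses, errors)
sig_sse, sig_predict = fit_family(sigmoid_feature, sigmoid_shapes, losses, errors)

for loss in (3.0, 2.05, 1.84):           # one fitted point, then two extrapolations
    print(f"loss {loss}: exponential {exp_predict(loss):.3f}, sigmoid {sig_predict(loss):.3f}")
```

Both forms can track five points closely inside the fitted range. Nothing in those five points constrains what either curve does outside that range, which is exactly where the predictions matter.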

No one actually knows how strong the next models will be

There are obviously many ways to improve the above “prediction”: using better scaling laws, using better estimates of data and compute, and so on.8 The point of the above exercise is more to show the amount of uncertainty baked into these predictions than to perform an accurate prediction.

Ultimately, scaling laws are noisy approximations, and with this chained method of prediction, we’re coupling two noisy approximations together. When you consider that the next models may have entirely different scaling laws, as a result of different architectures or data mixes, no one really knows the capabilities of the next few model scale-ups.

Then why are model providers so optimistic about naive scaling?

Major cloud companies and others tend to be very optimistic about scaling today’s models:

- “Despite what other people think, we’re not at diminishing marginal returns on scale-up,” Scott said. “And I try to help people understand there is an exponential here.” — Kevin Scott, Sequoia video interview (2024)

- “they found that the A.I. didn’t just get better with more data; it got better exponentially… he still believes A.I. is on an exponential growth curve, following principles known as scaling laws…” — Ezra Klein, The New York Times (2024)

Some attribute this optimism to commercial incentive, but I think it comes from a combination of: (1) labs potentially having access to more optimistic internal scaling laws, (2) first-hand experience of scaling working despite widespread skepticism over the past few years, and (3) scaling being a call option:

- “When we go through a curve like this, the risk of under-investing is dramatically greater than the risk of over-investing for us here, even in scenarios where if it turns out that we are over-investing…these are infrastructure which are widely useful for us,…” – Sundar Pichai (2024)

- “I’d much rather over-invest and play for that outcome than save money by developing more slowly…. Meaningful chance a lot of companies are over-building now… the downside of being behind leaves you out of position for the most important technology over the next 10-15 years” – Mark Zuckerberg (2024)

We need better conversations around future AI capabilities

I don’t think extrapolating scaling laws is as straightforward as many claim, so I decided to share my own naive attempt here, since I couldn’t easily find a public one elsewhere. My main takeaways are: (1) most current discussions around predicting AI capabilities aren’t high quality, and (2) public scaling laws tell us very little about the capabilities we would get from scaling existing models.

For the public to effectively evaluate whether today’s AI models can scale, we’ll need to see more evidence-based predictions and better evaluation benchmarks. If we knew the capabilities of future models, we could prioritize preparing for those capabilities – building out bio-manufacturing capabilities in preparation for a research revolution in biology, creating upskilling companies in preparation for labor displacement, and so on.

I’m personally optimistic about the progress of AI capabilities because of the talent in the field. However, AI scaling isn’t as deterministic as people think, and no one really knows what the next few years of AI build-outs will reveal.

Thank you to Celine, Matthew, Jasmine, Coen, Shreyan, Nikhil, Trevor, Namanh, and Gabe for their invaluable feedback; Justin for his help with scaling laws; and Bela, Susan, and Maxwell for ‘encouragement.’

November 30, 2024 update: Following Miles’ note, I switched the data and compute estimates to the IFP estimates instead of using my own compute estimates.

Notes

1. There are many potential issues with this approach, including the choice of tasks, the assumed function for modeling loss to error, the number of data points used for the fit, and not considering the differing quality of training tokens.

2. “I disagree with your methods on predicting the inputs (data and compute) for the next few model releases. You’re massively underestimating or overestimating the compute we’ll get from data center build-outs, distributed training, and GPU improvements, as well as the data we’ll get from leveraging multi-modal data and synthetic data. Why don’t you replot with those considerations?” With Gadre’s scaling laws, I don’t think it really matters if you increase compute or data by another few orders of magnitude. If you plot out the error surface, it still stagnates. We’re very close to the irreducible error.

3. Notably, we’re not taking into account potential advances such as cross-data center training.

4. I’m using the simplifying assumption that total GPU FLOPs = effective FLOPs (which is an overestimate because clusters will incur communication overhead).

5. I don’t include the Llama-400B model in later graphs but include it here for the sake of comparison to GPT-4 input estimates.

6. “I don’t understand the irreducible error in Gadre’s scaling laws. Why is the irreducible loss for the C4 dataset lower than the irreducible loss for the RedPajama dataset, when the C4 dataset is a subset of the RedPajama dataset?” Scaling laws fit on a small number of data points are rough approximations. As mentioned above, I think the method we have has several issues, and my larger point is that publicly available scaling laws aren’t trustworthy for predictions multiple orders of magnitude away from their fitted points.

7. It’s possible that the last few gains in loss will lead to extraordinary outcomes (e.g., being able to predict every single word is very different from being able to predict 99% of words), but this relates to the point of us not really knowing how to model the relationship between loss and capabilities in a generic way.

8. Potential blog: What should you do if you believe in AGI?
